Time-domain Gibbs sampling: From bits to inflationary gravitational waves

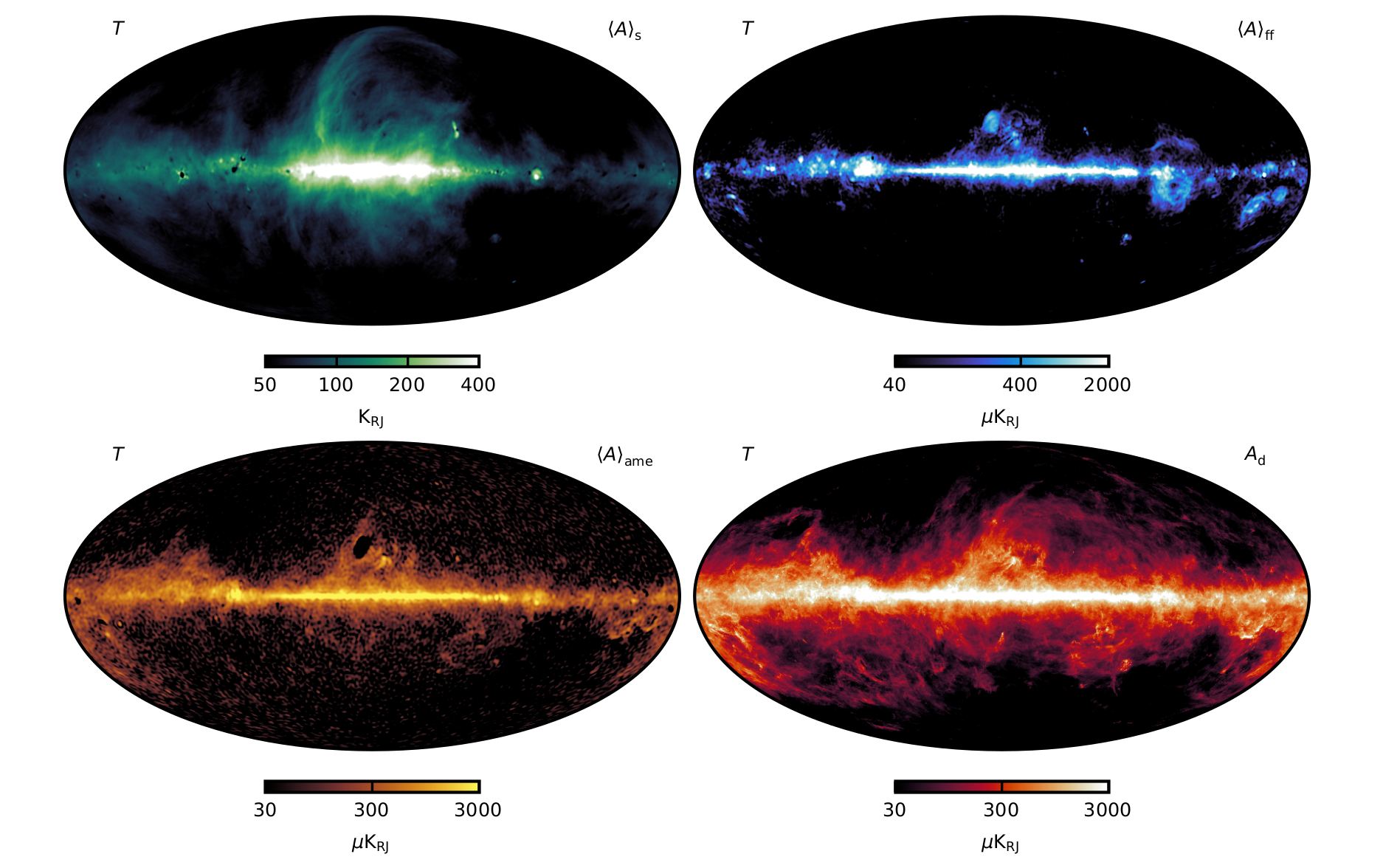

The detection of primordial gravitational waves created during the Big Bang ranks among the greatest potential intellectual achievements in modern science. During the last few decades, the instrumental progress required to achieve this has been nothing short of breathtaking, and today we are able to measure the microwave sky with better than one-in-a-million precision. However, the latest ultra-sensitive experiments, such as BICEP2 and Planck, have made it clear that instrumental sensitivity alone will not be sufficient for a robust detection of gravitational waves. Contamination in the form of astrophysical radiation from the Milky Way, for instance thermal dust emission and synchrotron radiation, obscures the cosmological signal by orders of magnitude. Even more critical, though, are second-order interactions between this radiation and the instrument characterization itself, which turn the analysis into a highly non-linear and complicated problem.

About the project

Our solution to this problem allows for joint estimation of cosmological parameters, astrophysical components, and instrumental parameters. The engine of this method is Gibbs sampling, which has already been applied very successfully to basic CMB component separation. The new and critical step is to apply this method to the raw time-ordered data recorded directly by the instrument, as opposed to pre-processed frequency maps. Although this represents a roughly 100-fold increase in input data volume, the step is unavoidable in order to break through the current foreground-induced systematics floor.
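Gibbs sampling draws from a complicated joint distribution by alternately sampling each group of parameters from its conditional distribution given all the others. The following is a minimal Python/NumPy sketch of the classic CMB Gibbs scheme in toy form, not Commander code. It assumes independent pixels with d_i = s_i + n_i, a single signal variance C standing in for the power spectrum, known white-noise variance N, and a Jeffreys prior p(C) ∝ 1/C; the function name `gibbs_signal_variance` is hypothetical.

```python
import numpy as np


def gibbs_signal_variance(d, noise_var, n_iter=2000, seed=0):
    """Toy Gibbs sampler for d_i = s_i + n_i, s_i ~ N(0, C), n_i ~ N(0, N).

    Alternates between the two conditional distributions:
      s | C, d : Gaussian draw around the Wiener-filtered data
      C | s    : inverse-gamma draw (Jeffreys prior p(C) ∝ 1/C)
    Returns the chain of C samples.
    """
    rng = np.random.default_rng(seed)
    n_pix = d.size
    C = np.var(d)  # crude starting point; burn-in removes its influence
    chain = np.empty(n_iter)
    for i in range(n_iter):
        # Step 1: sample the signal given the current variance C.
        post_var = C * noise_var / (C + noise_var)
        post_mean = C / (C + noise_var) * d
        s = post_mean + np.sqrt(post_var) * rng.standard_normal(n_pix)
        # Step 2: sample C given the signal. sum(s^2) / chi^2_{n_pix} is a
        # draw from inverse-gamma(n_pix / 2, sum(s^2) / 2).
        C = np.sum(s**2) / rng.chisquare(n_pix)
        chain[i] = C
    return chain


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    true_C, N = 4.0, 1.0
    d = rng.normal(0.0, np.sqrt(true_C), 5000) + rng.normal(0.0, np.sqrt(N), 5000)
    chain = gibbs_signal_variance(d, N)
    burned = chain[200:]  # discard burn-in samples
    print(f"C = {burned.mean():.2f} +/- {burned.std():.2f} (true value {true_C})")
```

Each conditional on its own is easy to sample, yet chaining them yields samples from the joint posterior. This is the property that makes it possible, in principle, to append further conditional steps (gains, noise, foreground parameters) to the same loop.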

We will apply this method to the best currently available and future data sets (WMAP, Planck, SPIDER and LiteBIRD), with the goal of deriving the world's tightest constraint on the amplitude of inflationary gravitational waves. Additionally, the resulting ancillary science, in the form of robust cosmological parameters and astrophysical component maps, will represent the state of the art in observational cosmology for years to come.

Commander3

Optimal Monte-carlo Markov chAiN Driven EstimatoR, or simply Commander, is the first real-world CMB analysis pipeline to adopt an end-to-end Bayesian approach.

Although Commander was first proposed in 2002, it took more than 15 years of computational and algorithmic development to make the approach feasible in practice. The latest version, Commander3, therefore brings together critical features such as:

- Modern linear solver;

- Mapmaking;

- Sky and instrumental modelling;

- CMB component separation;

- In-memory compression of time-ordered data;

- FFT optimization;

- MPI parallelization and load balancing;

- I/O efficiency;

- Fast mixing-matrix computation through look-up tables.
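The look-up table idea in the last item can be illustrated with a toy example. A bandpass-integrated mixing-matrix element requires an integral over the detector bandpass for every trial value of a spectral parameter, which is costly inside a sampler; a look-up table computes those integrals once on a grid and replaces them with cheap interpolation. This is a minimal sketch of the idea, not Commander3's implementation: it assumes a pure power-law SED, a uniformly sampled bandpass, no unit conversions or color corrections, and linear interpolation. The names `bandpass_mixing` and `MixingLookupTable` are hypothetical.

```python
import numpy as np


def bandpass_mixing(beta, nu, tau, nu_ref):
    """Bandpass-weighted average of a power-law SED (nu / nu_ref)**beta.

    Assumes `nu` is uniformly sampled, so a weighted mean approximates the
    bandpass integral; unit conversions and color corrections are ignored.
    """
    sed = (nu / nu_ref) ** beta
    return np.average(sed, weights=tau)


class MixingLookupTable:
    """Tabulate bandpass_mixing on a grid of beta once; evaluate by interpolation."""

    def __init__(self, nu, tau, nu_ref, beta_min=-4.0, beta_max=-2.0, n_grid=201):
        self.beta_grid = np.linspace(beta_min, beta_max, n_grid)
        # The expensive bandpass integrals are evaluated here, once per grid point.
        self.values = np.array(
            [bandpass_mixing(b, nu, tau, nu_ref) for b in self.beta_grid]
        )

    def __call__(self, beta):
        # Cheap linear interpolation; np.interp clamps to the end values
        # outside [beta_min, beta_max], so keep beta inside the tabulated range.
        return np.interp(beta, self.beta_grid, self.values)


if __name__ == "__main__":
    # Hypothetical top-hat bandpass from 27 to 33 GHz, reference frequency 30 GHz.
    nu = np.linspace(27.0, 33.0, 601)
    tau = np.ones_like(nu)
    lut = MixingLookupTable(nu, tau, nu_ref=30.0)
    beta = -3.1
    print(lut(beta), bandpass_mixing(beta, nu, tau, 30.0))
```

The trade-off is a one-off setup cost and some memory in exchange for avoiding repeated bandpass integrals inside the sampling loop; the grid spacing controls the interpolation error.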

Design philosophy

Recognizing the lessons learned from Planck, the defining design philosophy of Commander3 is tight integration of all steps from raw time-ordered data processing to high-level cosmological parameter estimation.

Traditionally, this process has been carried out in a series of weakly connected steps, pipelining independent executables with or without human intervention. Some steps have mostly relied on frequentist statistics, employing forward simulations to propagate uncertainties, while other steps have adopted a Bayesian approach. For instance, traditional mapmaking is a typical example of the former, while cosmological parameter estimation is a typical example of the latter; for component separation purposes, both approaches have been explored in the literature.

However, this approach is far from efficient, as it relies on many (potentially error-prone) human interventions. Commander3 therefore takes a different path. Written in modern object-oriented Fortran and tuned for use at high-performance computing centers, the code automatically performs all necessary processing steps, from mapmaking of raw time-ordered data to cosmological parameter estimation. Each independent step is implemented as a separate Fortran module, which makes the code highly extensible to new experiments. Moreover, because it uses the industry-standard CMake build system, the code can be installed on any modern Linux-based cluster.

Physically speaking, the single most important advantage of this uniform Bayesian approach is that it allows seamless propagation of uncertainties within a well-established statistical framework, from raw time-ordered data to cosmological parameters. As the Planck experience demonstrated, this aspect will become critically important for future experiments.
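A toy example shows why sampling-based propagation matters. Suppose a sky amplitude a is measured through time-ordered data d_t = g a + n_t, where the gain g is only known up to some uncertainty. Drawing g from its posterior and then a from p(a | g, d) yields samples of the marginal p(a | d) = ∫ p(a | g, d) p(g | d) dg, so the calibration uncertainty is carried into the error bar on a automatically, whereas fixing g at a best-fit value understates it. This sketch assumes white noise, a flat prior on a, and a Gaussian gain posterior that is given directly rather than sampled jointly with the sky; the function name `sample_amplitude` is hypothetical.

```python
import numpy as np


def sample_amplitude(d, sigma_n, gains, rng):
    """Draw one sample of the amplitude a per gain value, from p(a | g, d).

    Model: d_t = g * a + n_t with white noise n_t ~ N(0, sigma_n^2) and a flat
    prior on a, so p(a | g, d) = N(mean(d) / g, sigma_n^2 / (n * g^2)).
    """
    gains = np.asarray(gains, dtype=float)
    n = d.size
    mean = d.mean() / gains
    std = sigma_n / (np.sqrt(n) * gains)
    return mean + std * rng.standard_normal(gains.size)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a_true, g_true, sigma_n, n = 1.0, 1.0, 1.0, 10_000
    d = g_true * a_true + sigma_n * rng.standard_normal(n)

    n_samples = 20_000
    # Gain fixed at its best-fit value: only white noise enters the error bar.
    a_fixed = sample_amplitude(d, sigma_n, np.full(n_samples, g_true), rng)
    # Gain drawn from an assumed 2% Gaussian posterior: calibration uncertainty propagates into a.
    gains = rng.normal(g_true, 0.02, n_samples)
    a_marg = sample_amplitude(d, sigma_n, gains, rng)

    print(f"fixed gain:        sigma_a = {a_fixed.std():.4f}")
    print(f"marginalized gain: sigma_a = {a_marg.std():.4f}")
```

With these toy numbers, the marginalized error bar comes out at roughly the quadrature sum of the noise and calibration contributions, about sqrt(0.01² + 0.02²) ≈ 0.022, compared with 0.01 when the gain is held fixed.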

For most CMB experiments prior to Planck, the dominant source of uncertainty was noise; for most CMB experiments after Planck, the dominant source of uncertainty will be instrumental systematics, foreground contamination, and the interplay between the two. As a logical consequence of this fact, Commander3 adopts a consistent statistical framework that integrates detailed error propagation as a foundational feature.

Financing

This project is financed by Horizon 2020 through the "bits2cosmology" ERC Consolidator Grant of €2M (2018-2022), led by Prof. H. K. Eriksen.

Cooperation

Commander3 development is led by the University of Oslo in collaboration with many international partners, including:

- University of Milano

- INAF Trieste

- University of Helsinki

- Planetek Greece