Among the open questions, the LHC may reveal the origin of elementary particle masses (the Higgs particle), explain dark matter (possibly a particle predicted by Supersymmetry), investigate extra space dimensions and microscopic black holes, help understand the origin of matter in the Universe, and reproduce a state of matter (quark-gluon plasma) that prevailed just after the Big Bang.

Several aspects of LHC physics touch on the interface between particle physics and cosmology.

LHC is back

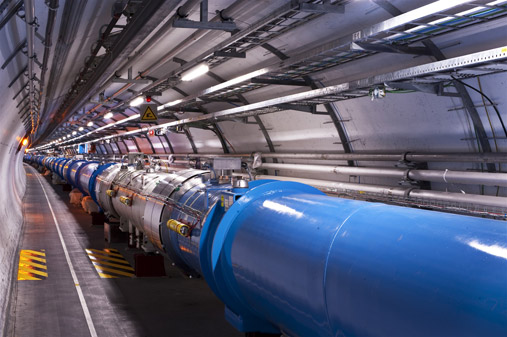

The successful circulation of the first proton beams in the LHC (Figure 2) on September 10 2008 was unfortunately followed, on September 19, by an incident caused by a faulty electrical connection between two of the accelerator’s magnets. This resulted in mechanical damage and the release of helium from the magnet cold mass into the LHC tunnel.

Figure 2. The LHC is the most technologically advanced scientific instrument ever built, allowing physicists to get insight into the basic building blocks of the Universe. The LHC is an underground accelerator ring 27 kilometers in circumference. Photo: CERN

After a year of repairs and detailed preparations, the LHC came back! The LHC went through a remarkably rapid beam-commissioning phase and ended its first full period of operation “on a high note” on December 16 2009.

First collisions were recorded on November 23 and, over two weeks of data taking, the six LHC experiments (ALICE, ATLAS, CMS, LHCb, LHCf and TOTEM) accumulated over a million particle collisions at 900 GeV.

Collisions recorded at 2.36 TeV on November 30 set a new world record, confirming the LHC as the world’s most powerful particle accelerator.

After a shutdown lasting two months, the LHC team succeeded in accelerating two beams to 3.5 TeV on March 19 2010, and in colliding them on March 30.

In November 2010, lead-lead heavy-ion collisions reached 2.76 TeV per nucleon pair, another world record. This marked the start of the LHC physics research program.
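The 2.76 TeV figure can be checked with a short calculation. The sketch below assumes that the ring was set as for 3.5 TeV protons, so that a fully stripped lead ion (82 protons among 208 nucleons) carries 3.5 TeV per unit of charge. The function and variable names are illustrative only.

```python
# Illustrative check of the lead-lead collision energy.
# Assumption: the ring is set as for 3.5 TeV protons, so each ion
# carries 3.5 TeV per unit of electric charge.

BEAM_ENERGY_PER_CHARGE_TEV = 3.5  # from the proton runs
LEAD_CHARGE = 82                  # protons in a lead nucleus (Z)
LEAD_NUCLEONS = 208               # protons plus neutrons in lead-208 (A)


def energy_per_nucleon_tev(energy_per_charge_tev, charge, nucleons):
    """Energy carried by each nucleon of a fully stripped ion."""
    return energy_per_charge_tev * charge / nucleons


per_nucleon = energy_per_nucleon_tev(
    BEAM_ENERGY_PER_CHARGE_TEV, LEAD_CHARGE, LEAD_NUCLEONS
)
# Two nucleons, one from each beam, meet head-on.
per_nucleon_pair = 2 * per_nucleon

print(f"{per_nucleon:.2f} TeV per nucleon in each beam")
print(f"{per_nucleon_pair:.2f} TeV per colliding nucleon pair")
```

The energy per nucleon is lower than for protons because the neutrons in the lead nucleus carry no charge and are not accelerated directly.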

High energies and high collision rates

The higher the beam energy and collision rates are, the greater the discovery potential.

How come?

Producing particles of large mass requires a lot of energy, according to Einstein's well-known relation $E = mc^2$, which states the equivalence of mass $m$ and energy $E$, related to each other by the square of the speed of light $c$.

The mass of a proton corresponds to about 1 GeV. Two protons at rest have an energy equivalent of about 2 GeV. The total LHC collision energy of 7 TeV, equivalent to about 7000 proton masses, is due almost entirely to the kinetic energy of the accelerated protons.
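The arithmetic behind these numbers can be sketched in a few lines. The example assumes two protons of 3.5 TeV each colliding head-on and uses the more precise proton rest energy of 0.938 GeV instead of the rounded 1 GeV; the variable names are illustrative.

```python
# Rough energy bookkeeping for a 7 TeV proton-proton collision.
# Assumption: two beams of 3.5 TeV protons colliding head-on.

PROTON_REST_ENERGY_GEV = 0.938272  # proton mass expressed as energy (about 1 GeV)
BEAM_ENERGY_GEV = 3500.0           # total energy of each accelerated proton


def lorentz_factor(total_energy_gev, rest_energy_gev):
    """Ratio of a particle's total energy to its rest energy."""
    return total_energy_gev / rest_energy_gev


gamma = lorentz_factor(BEAM_ENERGY_GEV, PROTON_REST_ENERGY_GEV)
kinetic_energy_gev = BEAM_ENERGY_GEV - PROTON_REST_ENERGY_GEV
kinetic_fraction = kinetic_energy_gev / BEAM_ENERGY_GEV

# For equal, opposite beams the energies simply add up.
collision_energy_gev = 2 * BEAM_ENERGY_GEV
proton_masses = collision_energy_gev / PROTON_REST_ENERGY_GEV

print(f"Each proton carries about {gamma:.0f} times its rest energy")
print(f"Kinetic share of the beam energy: {kinetic_fraction:.4%}")
print(f"Collision energy in proton masses: about {proton_masses:.0f}")
```

With the rounded value of 1 GeV per proton the last figure is 7000; with the more precise rest energy it comes out somewhat higher.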

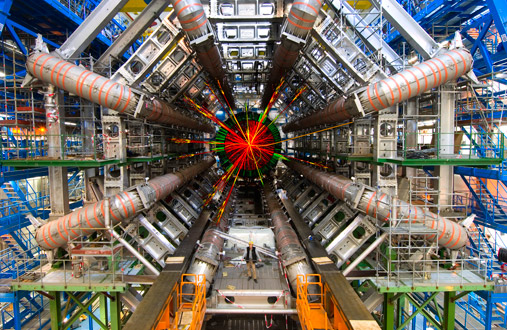

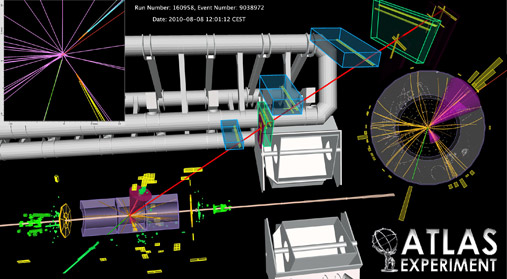

It is this kinetic energy that allows the creation of particles, both known (the top quark in Figure 3) and unknown (the Higgs or dark matter, if they exist).

Figure 3: This picture of a particle collision, which resulted in the production of a top quark and its antiparticle, demonstrates that the ATLAS detector performs very well. Norwegian scientists made a strong contribution to the semiconductor tracker, one of the central detectors, whose aim is to identify charged particles such as electrons and muons. Other detector types, the calorimeters, measure the energy of electrons, photons and hadrons (protons, neutrons, pions). All other particles have too short a lifetime to be observed in the detectors. They are inferred from their decay products with the help of conservation laws, such as those of energy and momentum. This is the case for the top quark and antiquark, which lead to an electron, a muon and jets in the picture to the left. Illustration: The ATLAS experiment

An LHC collision produces hundreds of particles measured by the detectors, which can be thought of as a kind of digital camera with millions of readout channels instead of pixels.

The higher the particle mass, the harder it is to produce it. This is where luminosity enters.

Luminosity gives a measure of how many collisions are happening in a particle accelerator: the higher the luminosity, the more particles are likely to collide. When looking for rare processes, this is important.

Higgs or dark matter, for example, will be produced very rarely if they exist at all, so for a conclusive discovery or disproof of their existence, a large amount of data is required.
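The link between luminosity and the amount of data can be made concrete: the expected number of events of a given type is the probability of the process (its cross-section) multiplied by the luminosity accumulated over time. The sketch below uses purely hypothetical cross-sections and luminosities, chosen only to illustrate the orders of magnitude; they are not measured values.

```python
# Expected event counts: N = cross-section x integrated luminosity.
# All numerical inputs below are hypothetical and for illustration only.

PICOBARN_IN_CM2 = 1e-36  # 1 barn = 1e-24 cm^2, so 1 picobarn = 1e-36 cm^2


def expected_events(cross_section_pb, integrated_luminosity_inv_pb):
    """Average number of events produced in a data sample."""
    return cross_section_pb * integrated_luminosity_inv_pb


def event_rate_per_second(cross_section_pb, luminosity_cm2_s):
    """Average production rate for a given instantaneous luminosity."""
    return cross_section_pb * PICOBARN_IN_CM2 * luminosity_cm2_s


# Hypothetical data sample of 40 inverse picobarns.
sample = 40.0
common_process = expected_events(10_000.0, sample)  # assumed 10 nb process
rare_process = expected_events(1.0, sample)         # assumed 1 pb process

# Hypothetical instantaneous luminosity of 1e32 per cm^2 per second.
rare_rate = event_rate_per_second(1.0, 1e32)

print(f"Common process: about {common_process:.0f} events")
print(f"Rare process:   about {rare_process:.0f} events")
print(f"Rare process rate: about {rare_rate * 3600:.2f} events per hour")
```

Under these assumptions the rare process yields only a handful of events per day of running, which is why both higher luminosity and long data-taking periods matter for discoveries.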

A worldwide distributed computing system

The real data collected by the LHC experiments have been distributed smoothly around the world on the Worldwide LHC Computing Grid (WLCG). Distributed data analysis quickly followed, allowing the calibration of the various LHC detectors and producing the first physics results.

The Nordic countries are committed to contributing computing and storage resources for the processing and analysis of data produced by the LHC.

For this, they participate in the Nordic Tier-1 component of the WLCG collaboration, through the Nordic Data Grid Facility (NDGF). NDGF is the only distributed Tier-1, with 2 of its 7 centres located at the universities of Oslo and Bergen.

The mission of the WLCG is to build and maintain data storage and analysis infrastructure for the entire High Energy Physics (HEP) community around the LHC.

Raw data emerging from the experiments are recorded and initially processed, reconstructed and stored at the Tier-0 centre at CERN, before a copy is distributed across 11 Tier-1s, which are large computer centres with sufficient storage capacity and with 24/7 operation.

The role of Tier-2s is mainly to provide computational capacity and appropriate storage services for Monte Carlo event simulation and for end-user analysis.

Other computing facilities in universities and laboratories take part in LHC data analysis as Tier-3 facilities, allowing scientists to access Tier-1 and Tier-2 facilities.

Early in 2009 the Common Computing Readiness Challenge (CCRC) tested the Grid’s capacity to handle the high volumes of data and simultaneous event analysis, by running computing activities of all four major LHC experiments together.

It also tested the speed with which problems could be identified and resolved while running. Nearly two Petabytes of data were moved around the world, matching the amount of data transfers expected when the LHC runs at high luminosity.

Rates of up to 2 Gigabytes per second leaving CERN for the Tier-1 sites were achieved. The test run was a success in terms of handling data transfer, providing computing power for analysis jobs, and quickly solving problems as they arose.

WLCG gets busy

Despite the highly successful tests, it remained to be seen whether the effects of chaotic data analysis by impatient physicists had been correctly anticipated.

During the period between October 2010 and March 2011, the LHC provided higher and higher luminosities for the final month of proton-proton running, as well as a rapid switch to heavy ions and several weeks of heavy-ion data taking.

The technical stop from December to March was also very active for the computing system, with all experiments reprocessing their full 2010 data samples.

The Tier-0 storage system (Castor) easily managed very high data rates. The amount of data written to tape reached a record peak of 220 Terabytes/day. The bandwidth of data movement into the Tier-0 peaked at 11.5 Gigabytes/s, and transfer out peaked at 25 Gigabytes/s, in excess of the original plans.

The corresponding rates averaged over the entire year are 2 Gigabytes/s and 6 Gigabytes/s respectively. Two and four Petabytes/month were stored in the Tier-0 during the proton and lead runs, respectively.
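These figures mix several units (per day, per month, per second), so a small conversion helps to compare them. The sketch below assumes decimal units (1 Terabyte = 10^12 bytes, 1 Petabyte = 10^15 bytes) and a 30-day month; the helper name is illustrative.

```python
# Converting the quoted storage volumes into sustained rates in Gigabytes/s.
# Assumptions: decimal units and a 30-day month.

SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_MONTH = 30  # assumption for the monthly figures


def to_gigabytes_per_second(num_bytes, seconds):
    """Average transfer rate needed to move num_bytes in the given time."""
    return num_bytes / seconds / 1e9


# Record peak written to tape: 220 Terabytes in one day.
tape_peak = to_gigabytes_per_second(220e12, SECONDS_PER_DAY)

# Monthly volumes stored in the Tier-0: 2 PB (protons) and 4 PB (lead).
seconds_per_month = DAYS_PER_MONTH * SECONDS_PER_DAY
proton_run = to_gigabytes_per_second(2e15, seconds_per_month)
lead_run = to_gigabytes_per_second(4e15, seconds_per_month)

print(f"Tape peak:  {tape_peak:.2f} Gigabytes/s sustained over a day")
print(f"Proton run: {proton_run:.2f} Gigabytes/s sustained over a month")
print(f"Lead run:   {lead_run:.2f} Gigabytes/s sustained over a month")
```

Expressed this way, the storage volumes correspond to sustained rates well below the peak bandwidths into and out of the Tier-0.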

The data stored during the first (very low luminosity) year amount to close to 15 Petabytes, the volume initially estimated for the high-luminosity running periods!

External networking performance (Tier-0 – Tier-1s) was 13 Gigabits/s, with peaks at 70 Gigabits/s. With the rapid increase of data produced by the LHC, an extension of the Tier-0 is foreseen outside CERN, and Norway is one of the host candidates. The efficient use of an infrastructure such as the WLCG relies on the performance of the middleware.

Another interesting development worth mentioning is the inclusion, since May 2010, of NorduGrid's ARC middleware development, led by the University of Oslo, within the European Middleware Initiative (EMI, co-funded by the EU and coordinated by CERN). EMI is the body that provides the European Grid Infrastructure (EGI), and indirectly the Worldwide LCG, with middleware based on ARC, dCache, gLite and UNICORE.

Experiments tuned for “surprises”

The LHC running conditions in 2010 were so good that CERN decided to run the LHC in both 2011 and 2012, before the machine is upgraded to allow higher energies, up to 7 TeV per beam, and much higher collision rates.

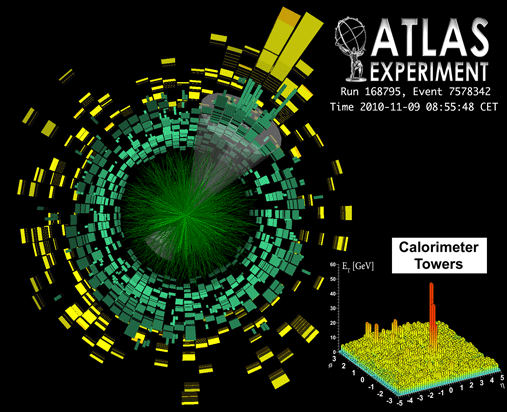

This ATLAS lead-lead collision event at 2.76 TeV leads to many recorded particles. When lead ions collide in the LHC, they can concentrate enough energy in a tiny volume to produce a primordial state of highly dense matter, known as quark-gluon plasma. In the matter that surrounds us, quarks are confined in protons and neutrons, which are the constituents of nuclei that, together with electrons, form atoms. Quarks are held together by gluons, the mediators of the strong force. Quarks and gluons have never been observed as free particles.

Loosely collimated “jets” of particles are produced when quarks and gluons try to escape each other. In proton collisions, jets usually appear in pairs, emerging back to back. However, in heavy-ion collisions the jets seem to interact with a hot dense medium. This leads to a characteristic signal, known as jet quenching, in which the energy of the jets can be severely degraded. It is this striking imbalance in the energies of pairs of jets that is observed by ATLAS, where one jet is almost completely absorbed by the medium. ALICE and CMS confirmed this effect.

Billions of events were recorded in 2010 that led to the “re-discovery” and study of all known elementary particles, such as the mediators of weak interactions (W and Z particles) and the top quark, the heaviest of all. One surprise could come from the observation of “jet quenching” in heavy ion collisions (Figure 5).

Whether this has anything to do with the long-awaited plasma of quarks and gluons, a state of matter that prevailed some microseconds after the Big Bang, needs confirmation by ALICE, ATLAS and CMS … once more data are available. Then the LHC will be confirmed as The Big Bang Machine!

On April 22, 2011 the LHC set a new world record for beam intensity at a hadron collider. This exceeds the previous world record, which was set by the US Fermi National Accelerator Laboratory’s Tevatron collider in 2010, and marks an important milestone in LHC commissioning.

As of May 10 2011, ten times more proton-proton data have been accumulated than in 2010. This is very promising. A long shutdown at the end of 2012 to prepare for an increase of the total energy towards 14 TeV will be followed by a long and smooth data-taking period.

The LHC's physics research programme has definitely started; the WLCG will be very busy, and physicists all over the world will have fun for the next 20 years or so.

There is a new territory to explore – the TeV scale – let us see with the LHC!